This is Part 2 of the Time Intelligence Economy series.

AI is entering every layer of modern work, from writing and coding to support and fraud detection. Across surveys and reports, usage is already mainstream in knowledge work and inside organizations, no longer a niche experiment [1][2]. I do not want to debate whether AI is useful. It clearly is. The question that matters is structural: where AI belongs, where it does not, and what this implies for how humans must evolve to stay competent and responsible.

Most failures around applied AI come from confusion about what work actually is, and therefore confusion about which parts of work can be abstracted safely. To reason clearly about the boundary between humans and AI at work, we need first principles: the structural differences between human and AI intelligence, and the structural definition of work itself. Once those two are clear, the rest follows.

We will start by describing humans and AI as different kinds of systems, without moralizing. We will then define work as a structured engagement with reality. Finally, we will see what must stay human as a consequence of that structure, especially in the years where execution becomes cheaper but responsibility stays human.

We will not go into the implementation details of AI, training models, neural networks, and such, since intelligence can be implemented in many ways. Instead, we will focus on the structural properties that arise from the difference between humans and AI as systems.

Humans and AI as different kinds of systems

Humans and AI can produce outputs that appear similar. They can write text, generate code, summarize information, and propose plans. But similarity of output hides a deeper difference. Humans and AI are different kinds of systems, and that difference matters because work is more than just producing output. Work is a serious act of engagement with reality, and reality responds.

The most important difference between humans and AI is that humans have lived experience. Humans live inside the same world they act upon. They experience consequences directly. Their judgment is shaped by stakes, risk, responsibility, loyalty, fear, pride, shame, and the obligation to answer for outcomes. These traits may seem decorative, but they are part of the mechanism by which decisions are made under uncertainty.

AI systems do not have lived experience. They do not inhabit consequence. They do not carry moral weight or responsibility. Their knowledge is derived from patterns in human generated data, not from participation in the world those patterns describe. They can represent empathy and compassion in language, but they do not feel it. This might sound like a moral critique, but it is a structural property.

This distinction becomes clearest when stakes are high. Imagine reviewing a head of state’s speech before it is delivered. One sentence can trigger diplomatic consequences, market movement, unrest, or violence. The review process becomes slow, cautious, and multi perspective because the reviewer will live in the world shaped by that speech.

An AI system can help generate alternatives, flag ambiguity, and highlight risks. But it does not feel the gravity of consequence. It does not have a reputation to lose, a family to protect, or a nation to answer to. That does not make it useless. It makes it unsuitable as final authority.

Another important tenet of difference is the breadth of operation. AI can retrieve and synthesize information across many domains quickly, and it can generate many competing interpretations of the same prompt. Humans can also retrieve, research, and learn, but they are limited by time, attention, and memory, and they typically build understanding through slower iteration.

| Property | Humans | AI systems | What it implies for work |
|---|---|---|---|
| Lived experience | Lives inside reality, feels consequences | Does not live consequences, models reality indirectly | Humans should own decisions where consequences matter |
| Knowledge breadth | Limited, local, embodied | Vast, non-local, pattern-based | AI excels at recall, synthesis, and rapid variation |
| Uncertainty handling | Can hesitate and sense unknowns | May produce confident outputs under ambiguity | Require explicit boundaries, grounding, and human review |
| Accountability | Can be responsible, blamed, praised | Cannot hold moral debt or be accountable | Authority cannot be delegated, only execution can |
| Empathy & compassion | Experienced internally | Can emulate in language but not experience | AI should not be final moral decision-maker |
| Speed & scale | Slower, limited attention | Fast, scalable, tireless | Delegate repetitive, well-scoped execution to AI |

We will return to these properties later. Now let's define what work is.

Work as engagement with reality

Most definitions of work are social. They revolve around job roles, deliverables, output, and effort. Those definitions are useful, but they are not fundamental. A fundamental definition should be independent of company, industry, and tools.

Work is the act of engaging with reality in order to intentionally change it.

Reality exists regardless of whether we understand it. Some call it truth, some call it mathematical axioms, some call it simply “how things are”. In this essay, we will call it reality. It is a structure of the world that nobody can negotiate with. The Truth.

Work begins when somebody, or something, chooses to observe reality, build an understanding of it, decide what should change, and act to move reality closer to that intention. This definition covers software engineering, science, governance, medicine, business strategy, and personal development. The surface artifacts change. The structure does not.

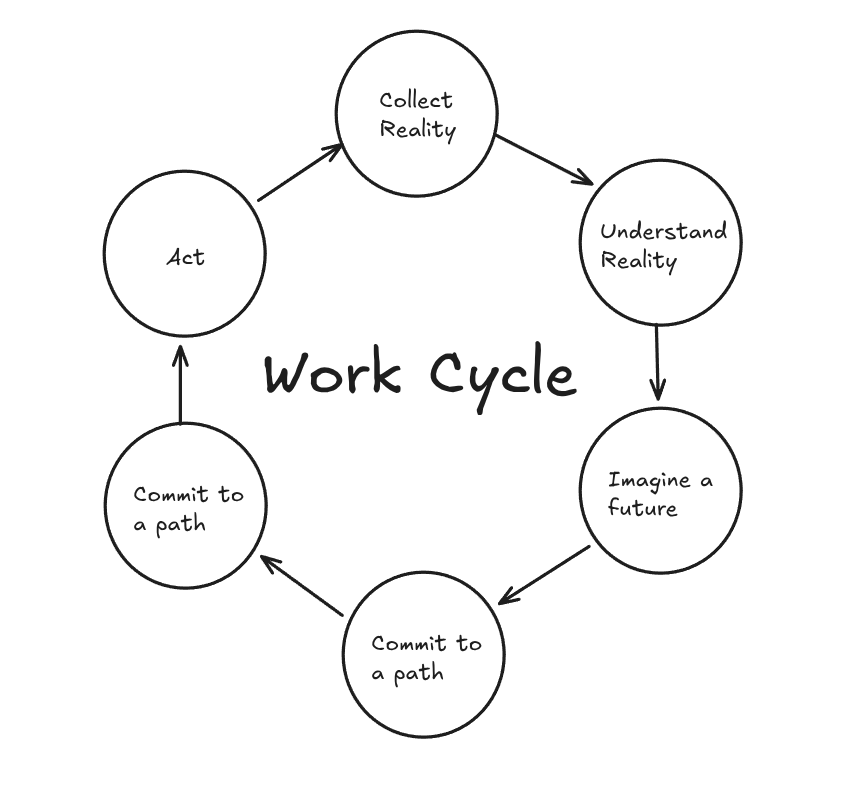

The structure of work

If work is engagement with reality, then it necessarily follows a few phases. These phases arise because reality itself imposes them. You can compress them, rename them, or obscure them, but attempts to remove them break the loop.

What follows is the minimal structure that any serious work, across domains, passes through.

- Collect reality: Work begins with contact. Reality continuously emits signals: facts, constraints, measurements, feedback, events. The first obligation of work is to collect these signals without distortion. Logs, metrics, financial statements, user complaints, market movements, physical sensations: all of these are acts of collection. This phase is deliberately non interpretive. It merely records what is present.

- Understand reality: Collection alone is useless without structure, because data can be subjective. Understanding is the act of compressing data into models that allow shared reasoning. This involves making growth charts of monthly recurring revenue, mapping donation flows against campaigns, building causal diagrams of system outages, or drafting mental models of user behavior. Modeling is an attempt to make reality legible to an intelligent being, human or machine, without losing the essential shape of what is true.

- Judge reality: Understanding does not yet create direction. Direction enters through judgment. Judgment is where values are applied to reality as it is understood. What is acceptable? What is broken? What is risky? What matters most? These questions cannot be answered by data alone, because data has no preferences. Choosing reliability over speed, serving one class of users over another, or accepting certain risks in exchange for others are all value judgments. Without judgment, work has motion but no direction.

- Imagine a future: Imagination is the phase where work escapes the gravity of the present. It can be optimization, a rewrite, or a radical innovation. It is the deliberate proposal of a future that does not yet exist but is worth pursuing. This phase asks a different class of question: what could be different, and what should be different. Proposing a shift from reactive support to proactive education, redesigning a feature to solve the deeper problem users have been working around, or choosing to rebuild infrastructure before it becomes a crisis: these are acts of imagination.

- Commit to a path: A plan is a map of “commitment under uncertainty”. Commitment is the point at which tradeoffs become real and opportunity cost is accepted. By committing to one path, other paths are deliberately abandoned. This is where responsibility becomes concrete. Deciding to invest engineering time in scaling infrastructure instead of new features, committing to a six week timeline that forces hard scoping decisions, or choosing to sunset a product line to focus resources elsewhere: these are moments of commitment.

- Act: Action is where theory is exposed to consequence. Building, testing, deploying, intervening, speaking publicly, shipping a new API version, running a database migration, or publishing a policy change: all action modifies reality, even when it fails. Action without prior commitment produces activity but not progress. Action with weak understanding produces damage. In serious work, action is not celebrated for its own sake.

- Reality responds: Reality always answers back. Sometimes clearly, sometimes painfully. Outcomes, side effects, unintended consequences, and second order effects all belong here. Users adapt to the new feature in unexpected ways, a drug starts showing unknown side effects, a database migration causes latency spikes you did not anticipate, the policy change triggers public backlash, or customers start churning for reasons you did not model. This is reality reasserting itself. The response becomes new input, and the cycle restarts. Work does not terminate. It only stabilizes temporarily, until reality shifts again.

With this structure, we can put the nature of humans and AI into context and something like this emerges:

| Phase | Nature (core faculty) |
|---|---|
| Collect reality | Sensing (AI dominant) |
| Understand reality | Modeling (Human dominant with AI help) |
| Judge reality | Valuation (Human dominant) |
| Imagine a future | Imagination (Human dominant) |
| Commit to a path | Commitment (Human dominant) |
| Act | Execution (AI dominant) |
| Reality responds | Feedback (AI dominant) |

This mapping falls out of the earlier distinction between systems that live inside consequence and systems that only model it. The phases that bind the future, valuation, imagination, and commitment, are exactly the phases that create moral and organizational obligation. They decide what is acceptable, what is worth building, and which tradeoffs the system will stand behind when reality responds. Obligation requires an accountable agent, because obligation is the thing you are blamed or praised for. That closes the loop back to lived experience: the right to decide is inseparable from the requirement to live with the consequences of the decision.

What must stay human

Once work is understood as engagement with reality, the answer follows from structure. Work changes reality. Changing reality creates consequences. Someone has to live with those consequences. AI can participate in work, but it does not live in the world it changes. It does not carry the outcome of its decisions forward. Humans do.

AI does help tremendously to compress time in phases that transform information, explore possibilities, and execute within constraints. But the phases where values are applied, commitments are made under uncertainty, and consequences are carried forward must remain human.

Authority emerges where decisions bind the future and create obligation. This means authority belongs to the kind of agent that can actually pay the price of being wrong in the world it is changing.

As a result, human expertise cannot remain centered on execution. It moves toward judgment, commitment, and ownership of outcomes.

The shift in rigor: from execution to judgment, models, and measurement

For the last few decades, in many professions, expertise was strongly coupled to execution. The expert was the person who could reliably produce correct output, under constraints and deadlines, by applying technique with care. In engineering, this meant writing correct scalable code, designing systems carefully, and debugging effectively under pressure. In analytics, it meant writing correct queries, building reliable pipelines, and interpreting results responsibly. In writing, it meant drafting clearly and editing precisely.

AI changes the economics of execution. It makes certain forms of output cheap and fast. This means human rigor will need to relocate away from these areas if humans are to spend their time and energy where it counts.

The failure mode has gone from “you cannot execute” to “you can execute too easily without noticing that you have lost contact with reality”.

When execution becomes cheap, breadth grows and depth of rigor often falls, so systems must enforce measurable guarantees of quality.

This matters for humans because it changes where leverage lives. In a world where output can be generated quickly, the person who creates the most value is the person who can keep the work cycle honest: the person who can form correct mental models, notice when mental models are wrong, make good value judgments under uncertainty, and define measurable constraints so that speed does not quietly degrade quality. This is where “10x” starts meaning something real again: durable trajectory, not just fast output.

This is what falls out of the structure we defined above. If AI compresses execution, then human advantage concentrates in the phases AI cannot own.

The human role shifts toward four kinds of rigor that become non-negotiable:

Rigor in mental models

Understanding reality is the phase where mental models are formed. If your mental model is wrong, downstream execution merely makes the wrong thing faster.

AI is an eloquent flatterer. It can generate hypotheses and propose explanations, but it can also make wrong mental models feel persuasive because it is fluent. Fluency is not truth.

In the AI era, an expert is not just someone who can implement. An expert is someone who can maintain a correct mental model of the system, notice model drift, and update mental models when reality responds differently than expected.

This is why I think the discipline of systems thinking becomes more important with AI. Work still begins with reality, and reality still punishes wrong assumptions. If you use AI to perform data analysis, the model you are using is not only your model of the business, but also the implicit model encoded by the queries, filters, and joins that produced the results. If those are not visible, you cannot evaluate the model. You are left with a story. This is why serious AI use in analytics must demand the chain of evidence: the exact queries used, the dataset version and time range, and the transformation steps applied. Without that trace, modeling power becomes a liability, because fluent wrongness looks like insight.
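One way to make that chain of evidence concrete is to refuse any finding that does not carry it. The sketch below is only an illustration: the names `EvidenceTrace`, `Finding`, and `is_auditable` are hypothetical, and the checks only confirm that the trace is present and well formed, not that the queries themselves are right.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class EvidenceTrace:
    """Everything needed to re-run and audit one analytical claim (hypothetical shape)."""
    queries: Tuple[str, ...]          # exact query text, as executed
    dataset_version: str              # label of the dataset snapshot that was queried
    start: date                       # inclusive start of the time range
    end: date                         # inclusive end of the time range
    transformations: Tuple[str, ...]  # ordered steps applied afterwards; may be empty

    def problems(self) -> List[str]:
        """Return the names of trace fields that are missing or inconsistent."""
        found = []
        if not self.queries:
            found.append("queries")
        if not self.dataset_version.strip():
            found.append("dataset_version")
        if self.start > self.end:
            found.append("time_range")
        return found


@dataclass(frozen=True)
class Finding:
    claim: str
    trace: Optional[EvidenceTrace] = None

    def is_auditable(self) -> bool:
        """A finding without a complete trace is a story, not evidence."""
        return self.trace is not None and not self.trace.problems()
```

A review step could then reject any `Finding` for which `is_auditable()` is false before it reaches a decision maker. Whether the trace actually supports the claim still has to be judged by a person.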

Rigor in judgment

Judgment is where values, risk tolerance, and priorities enter. AI can only guess values. It can emulate value language, but it does not own the moral or monetary weight of tradeoffs.

This is where human expertise must become sharpest. As AI produces more options, the scarcity shifts from options to choice.

In a world of infinite suggestions, judgment becomes the bottleneck.

An expert must be able to say: this is acceptable, this is not. This risk is tolerable, this risk is not. This tradeoff aligns with what we stand for, this one does not. This is the future worth building, this is noise.

Without strong judgment, AI accelerates drift. You can see this even in something as simple as customer support. A support model can generate responses that sound polite, complete, and confident. But the decision of what the company is willing to commit to, which edge cases are exceptions, and where trust is prioritized over short term efficiency is not a language generation problem. It is valuation. If you allow AI to blur that line, you get a system that can create commitments the organization cannot honor, and you only discover the damage when reality responds through churn, escalations, and broken trust.

Rigor in measurement and signals

Executional rigor used to live inside the act of doing. When execution is abstracted, correctness must be proven externally.

This is where many teams will struggle during the transition. You have probably already heard something like:

“I don’t know, Cursor did it.”

If you do not build quality measurement into your workflow, AI will produce plausible output that quietly degrades quality. The degradation might not show up immediately. It will show up later as outages, churn, security incidents, and compounding confusion.

The antidote is to require traceability and signals as first class constraints. If AI is helping you produce output, your process must demand the ability to answer: how do we know this is correct?

This question is the new form of rigor. If you use AI to write or modify code, for instance, the appropriate response is not to stare at the diff and hope your intuition catches problems. You mostly won’t, because when the output is mostly correct, the rare errors are easy to miss. The appropriate response is to insist on a measurable contract: a test suite that proves correctness, benchmarks that detect regressions, and monitoring that makes failures observable after deployment.

In the transition period, you will still need execution expertise to evaluate code and spot subtle issues. Over time, the frontier shifts. The deeper skill becomes defining tests, invariants, and measurement harnesses so that correctness is enforced by the system, not by heroic manual review.
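As a rough illustration of enforcing correctness by the system, the sketch below states invariants for a small function and checks them against many random inputs. Everything here is a hypothetical example: `dedupe_keep_order` stands in for AI-generated code under review, and `check_invariants` is a minimal hand-rolled harness, not a substitute for a real test suite, benchmarks, or monitoring.

```python
import random
from typing import Callable, List


def dedupe_keep_order(items: List[int]) -> List[int]:
    """Stand-in for AI-generated code under review (hypothetical example)."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def check_invariants(
    fn: Callable[[List[int]], List[int]], trials: int = 500, seed: int = 0
) -> List[str]:
    """Run fn on random inputs and describe every invariant it violates."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    failures = []
    for _ in range(trials):
        data = [rng.randint(0, 9) for _ in range(rng.randint(0, 20))]
        out = fn(list(data))
        if len(out) != len(set(out)):
            failures.append(f"duplicates remain for input {data}")
        if set(out) != set(data):
            failures.append(f"elements lost or invented for input {data}")
        # Distinct values must appear in order of their first occurrence.
        expected = sorted(set(data), key=data.index)
        if out != expected:
            failures.append(f"order not preserved for input {data}")
        if fn(list(out)) != out:
            failures.append(f"not idempotent for input {data}")
    return failures
```

The point of the design is that the human writes down what must always be true, and the harness does the checking. A plausible but wrong implementation, such as one that sorts the output, is caught by the order invariant without anyone reading the diff.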

Rigor in eliminating confident wrongness

It’s stupid to think of hallucination as a random glitch. It is structural. Even state of the art systems can confidently produce false statements, and standard training and evaluation often reward guessing instead of acknowledging uncertainty [4][5]. That is the structural shape of the risk you are working with.

It helps to think about AI as a new hire with absurd range and crazy confidence. You would not trust an extremely talented person in their first week to make critical decisions without supervision, especially in unfamiliar systems. AI is like that permanently. It has breadth without lived context, and it can speak with confidence even when it should hesitate [4].

As humans, we’ve evolved to link confidence and charisma to correctness [9]. That instinct is quite deadly here. AI can generate answers that sound clean and complete even when the underlying reasoning is full of shit, the assumptions are hidden, or the claims are ungrounded. A good new hire will often say “I do not know” and ask questions that expose uncertainty. AI will often do the opposite unless you force uncertainty to be explicit.

So the rigor required is not only to trust AI less but, more importantly, to make confidence debuggable. That means insisting on sources, traces, and constraints wherever truth matters. It means building workflows where claims must be backed by logs, citations, queries, or measurements, and where anything ungrounded is treated as a hypothesis. The goal is not to reduce AI usefulness. The goal is to remove the failure mode where fluent wrongness silently becomes reality shaping decisions.
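A minimal sketch of such a workflow, assuming each claim arrives with a list of evidence references: the names `Claim`, `Status`, `classify`, and `split_claims` are hypothetical. The code only checks that a reference is present. It does not verify that the reference supports the claim, which remains a human task.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Status(Enum):
    GROUNDED = "grounded"
    HYPOTHESIS = "hypothesis"


@dataclass(frozen=True)
class Claim:
    text: str
    # References to logs, citations, queries, or measurements (assumed to be plain strings).
    evidence: Tuple[str, ...] = ()


def classify(claim: Claim) -> Status:
    """A claim with no non-blank evidence reference is treated as a hypothesis."""
    has_evidence = any(ref.strip() for ref in claim.evidence)
    return Status.GROUNDED if has_evidence else Status.HYPOTHESIS


def split_claims(claims: List[Claim]) -> Tuple[List[Claim], List[Claim]]:
    """Separate claims that carry evidence from those that need verification first."""
    grounded = [c for c in claims if classify(c) is Status.GROUNDED]
    hypotheses = [c for c in claims if classify(c) is Status.HYPOTHESIS]
    return grounded, hypotheses
```

Only the first list would be allowed to inform a decision directly. The second list becomes a queue of things to check, however confident the original wording was.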

Being “Confidently Wrong” is holding AI back [10]

A dedicated case: why I hate self driving cars

There is a class of AI applications where the boundary is not about research or productivity. It is about moral authority. Some uses of AI sit near this boundary, like identity verification, fraud scoring, and medical interpretation. But the cleanest and most widely understood example is self driving cars, because it places machine decisions directly in the path of physical harm. Regulators already treat this as a high consequence area, which is part of why crash reporting requirements exist for automated driving systems and certain driver assistance systems [6].

Most of the time, driving is not morally complex. It is a technical control problem operating within traffic rules and environmental constraints. This makes it tempting to treat self driving as just automation.

But the critical edge cases are not technical in the usual sense. They are moral.

In rare scenarios, a vehicle must make decisions that cannot be reduced to correctness in execution. It must choose between harms under uncertainty. It must decide whose safety is prioritized, what risks are acceptable, and what outcomes are chosen when all outcomes are bad.

People often respond with a simple idea: encode the ethics. But even at the level of public intuition, ethical preferences vary widely by culture and context. The Moral Machine experiment collected 39.61 million decisions from 233 countries and territories and found meaningful cross cultural variation in preferences [7]. There is no single obvious moral policy you can implement without taking a side.

Humans already face these scenarios, but when a human faces them, responsibility is legible. There is a person. There is agency. There is accountability. There is a social and legal framework for blame, negligence, intent, and punishment.

When a machine faces them, responsibility becomes diffuse. Who is accountable for the harm?

- The manufacturer who built the system?

- The engineers who trained the model?

- The policy team who defined behavior?

- The regulator who approved it?

- The passenger who did not control it?

- The pedestrian who trusted the environment?

Once responsibility becomes blurry, the idea of crime blurs. Not because harm disappears, but because accountability loses a single locus. This matters because it’s easy to mistake accountability for a detail of human society. Accountability is actually a mechanism by which moral decisions are constrained. When actions have consequences, and consequences can be traced to agents, behavior is shaped. That loop is part of how society maintains trust.

Self driving systems can plausibly become statistically safer than humans in aggregate, and companies do publish safety claims along those lines [8]. But even if a system improves averages, the question still remains: are we willing to grant machine authority in the tail cases where a moral decision is being made under uncertainty, with physical harm on the line?

Even if those edge cases are only one percent, they are the percent that defines whether we accept machine authority over life and death. For me, that is the line.

This is why I am comfortable with AI assisting humans in driving, but not with machines becoming final authority in those moral edge cases. Assistance preserves accountability. Replacement dissolves it.

Finally

AI compresses time inside the work cycle. It makes execution cheap, iteration fast, and synthesis abundant. That is real leverage.

But engaging with reality still imposes phases, and reality still responds.

No matter how strong AI gets, authority over judgment and consequence must remain human, and the rigor that preserves contact with reality must stay intact even as execution is abstracted.

The transition ahead is about moving rigor to where it matters: mental models, judgment, measurable systems, and accountability.

Respect reality. Don’t distort it. Don’t escape responsibility for it. And don’t confuse speed with velocity. That is what will keep expertise real.


References

[1] Microsoft Work Trend Index (2024): “AI at Work Is Here. Now Comes the Hard Part”

https://www.microsoft.com/en-us/worklab/work-trend-index/ai-at-work-is-here-now-comes-the-hard-part

[2] McKinsey Global Survey (2024): “The state of AI in early 2024: Gen AI adoption”

https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-2024

[3] Stanford HAI AI Index Report (2025 PDF): adoption and investment statistics covering 2024 usage

https://hai.stanford.edu/assets/files/hai_ai_index_report_2025.pdf

[4] OpenAI (2025): “Why language models hallucinate”

https://openai.com/index/why-language-models-hallucinate/

[5] Kalai, Nachum, Vempala, Zhang (2025): “Why Language Models Hallucinate” (arXiv)

https://arxiv.org/abs/2509.04664

[6] NHTSA: Standing General Order on Crash Reporting (ADS and Level 2 ADAS)

https://www.nhtsa.gov/laws-regulations/standing-general-order-crash-reporting

[7] Awad et al. (2018): “The Moral Machine experiment” (Nature)

https://www.nature.com/articles/s41586-018-0637-6

[8] Austin American Statesman (2025): reporting on Waymo safety report and comparative crash statistics in Austin

https://www.statesman.com/business/technology/article/austin-waymo-safety-report-crashes-21246378.php

[9] “The consequence of confidence and accuracy on advisor credibility” (SSRN)

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1615027

[10] “Being ‘Confidently Wrong’ is holding AI back”

https://promptql.io/blog/being-confidently-wrong-is-holding-ai-back